IMU Gesture Recognition and Tracking

INTRODUCTION

In today’s world, there is an increasing need to provide accurate indoor location-based services. These services are important for security and tracking in densely populated areas and for patient monitoring in hospitals and retirement homes. They can also be applied to manufacturing or shipping lines, where asset tracking increases quality and reliability.

In these situations, Wi-Fi devices such as cellphones are widely used and Wi-Fi access points are abundant. Repurposing these devices for indoor tracking reduces system cost but presents problems: performance degrades where signals are weak, and it varies with device placement. Weak signals can cause dead spots in tracking, and device placement, for example the location of a cellphone on a person’s body, can greatly vary the Wi-Fi signal strength.

The objective of this research was to develop a low-cost, low-energy system that uses an inertial measurement unit (IMU) to accurately and efficiently track an individual’s gesture and activity, in order to augment the indoor tracking system’s performance. The IMU provided a short-term trajectory to fill in dead zones, as well as device orientation and activity to dynamically modify tracking parameters. This IMU consisted of the accelerometers, gyroscopes, and magnetometers found in an Android tablet. The IMU data, along with location data, was sent to a central server for processing to reduce computational and energy requirements on the client side.

DESIGN

Android App

The indoor tracking system uses a central server to track the location of various clients, with clients like tablets and cellphones being most prone to interference. I decided to use an Nvidia Shield Tablet as my development platform and created two Android apps to send basic sensor information through UDP to the server. One app sends only accelerometer, gyroscope, and magnetometer data to test the gesture and activity recognition system, while the other adds Wi-Fi RSS data to be used for tracking.
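The source does not give the packet format the apps use, so the following is only a sketch of how one IMU sample could be packed into a UDP datagram and unpacked on the server. The binary layout, the function names, and the use of Python (the apps themselves are Android apps) are all assumptions made for illustration.

```python
import socket
import struct

# Hypothetical layout (not from the source): little-endian timestamp as a
# double, followed by accelerometer, gyroscope, and magnetometer x, y, z
# values as 32-bit floats.
PACKET = struct.Struct("<d9f")

def encode_sample(t, accel, gyro, mag):
    """Pack one timestamped IMU sample into a fixed-size payload."""
    return PACKET.pack(t, *accel, *gyro, *mag)

def decode_sample(payload):
    """Unpack a payload into (timestamp, accel, gyro, mag)."""
    values = PACKET.unpack(payload)
    return values[0], values[1:4], values[4:7], values[7:10]

def send_sample(sock, server, t, accel, gyro, mag):
    """Send one sample to the server as a single UDP datagram."""
    sock.sendto(encode_sample(t, accel, gyro, mag), server)
```

A client would create the socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_sample` once per sensor update. The server would pass each received datagram to `decode_sample`. A fixed-size binary layout keeps each datagram small, which suits the goal of reducing work on the client.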

IMU App

IMU+RSS App

Server-Side Development

I decided to use only basic accelerometer, gyroscope, and magnetometer data to determine gesture and activity. This reduces the processing power required on the client and removes the reliance on Android functions, making the system universal. These algorithms were coded in Matlab.

The system uses IMU Attitude and Short Trajectory Estimation algorithms as the building blocks to determine the IMU Orientation, Gesture, and Activity.

IMU Attitude

The attitude of the Android tablet provides a general outline of the user’s gesture and activity. The pitch and roll of the device are computed from the accelerometer data and are sufficient to give an initial classification of an individual’s gesture and activity. Heading can be calculated with the addition of magnetometer data, which eases integration with existing indoor location systems because movements in the device’s Body Frame can be transformed into the Earth Frame.
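As an illustration of the attitude computation, the sketch below uses the common textbook formulas for pitch and roll from a static accelerometer reading and for a tilt-compensated heading from the magnetometer. The axis convention (the z axis reads +g when the device lies flat) and the function names are assumptions. The original Matlab implementation may use different conventions or signs.

```python
import math

def pitch_roll(ax, ay, az):
    """Pitch and roll (radians) from one accelerometer sample.

    Assumes the device is not accelerating, so the reading is gravity only,
    and that the z axis reads +g when the device lies flat.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return pitch, roll

def heading(mx, my, mz, pitch, roll):
    """Tilt-compensated heading (radians) from one magnetometer sample."""
    # Project the magnetic field vector onto the horizontal plane.
    xh = (mx * math.cos(pitch)
          + my * math.sin(roll) * math.sin(pitch)
          + mz * math.cos(roll) * math.sin(pitch))
    yh = my * math.cos(roll) - mz * math.sin(roll)
    return math.atan2(-yh, xh)
```

For a tablet lying flat, `pitch_roll(0.0, 0.0, 9.81)` gives zero pitch and zero roll. Holding it so that gravity falls entirely on the y axis gives a roll of 90 degrees.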

IMU Short Trajectory Estimation

The IMU’s accelerometer and gyroscope are used to determine the trajectory of the tablet for short periods of time. The estimated trajectory remains relatively accurate because drift error does not accumulate significantly over short periods. The MahonyAHRS algorithm is used to determine the rotation matrices relative to the Earth Frame. The acceleration is then compensated for tilt, gravity is removed, and the result is double integrated to find displacement.

Flow Diagram of the IMU Short Trajectory Estimation algorithm

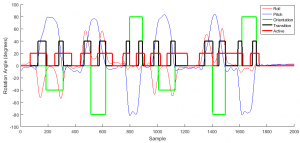
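The tilt compensation, gravity removal, and double integration steps can be sketched as follows. This sketch assumes the body-to-Earth rotation matrices have already been produced by an attitude filter such as MahonyAHRS (not implemented here), that the Earth Frame z axis points up, and that gravity is 9.81 m/s². The function names and the simple Euler integration are illustrative choices, not the original implementation.

```python
GRAVITY = 9.81  # m/s^2, assumed magnitude of gravity

def rotate(R, v):
    """Multiply a 3x3 rotation matrix (nested lists) by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

def short_trajectory(accel_body, rotations, dt):
    """Return Earth Frame positions for a short window of samples.

    accel_body: list of [ax, ay, az] in the Body Frame (m/s^2)
    rotations:  list of 3x3 body-to-Earth rotation matrices, one per sample
    dt:         sample period in seconds
    """
    vel = [0.0, 0.0, 0.0]
    pos = [0.0, 0.0, 0.0]
    path = []
    for a_b, R in zip(accel_body, rotations):
        a_e = rotate(R, a_b)   # tilt compensation: Body Frame to Earth Frame
        a_e[2] -= GRAVITY      # remove gravity (Earth z assumed to point up)
        for k in range(3):
            vel[k] += a_e[k] * dt   # first integration: velocity
            pos[k] += vel[k] * dt   # second integration: displacement
        path.append(list(pos))
    return path
```

Because any bias in the acceleration grows quadratically in the displacement, a routine like this is only useful over short windows, which matches the short-period use described above.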

IMU Orientation + Gesture

The orientation and gesture of the IMU can be fully described through combining the IMU attitude and trajectory. When the system starts, the pitch and roll of the IMU are recorded as initial conditions and variations in the pitch and roll are monitored. If a large change is detected and a new orientation is maintained, the instant when the new orientation is acquired and the duration of the transition period are determined. Based on the initial and final attitudes, the movement is initially classified then verified by computing the trajectory during the transition period.

The attitude and transition motion are used to describe the tablet’s true orientation and gesture, enabling the system to determine where the tablet is on the user.
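The transition detection described above can be sketched as follows. The thresholds, the hold length, and the function names are hypothetical values chosen for illustration. The sketch also ignores angle wraparound and leaves out the trajectory-based verification step.

```python
def deviation(a, b):
    """Largest absolute difference in pitch or roll between two attitudes."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))

def find_transition(angles, dt, change_thresh=0.5, settle_thresh=0.05, hold=10):
    """Find the first orientation transition in a (pitch, roll) series.

    angles: list of (pitch, roll) tuples in radians; angles[0] is the
            initial condition recorded at start-up.
    Returns (start_index, end_index, duration_seconds), or None if no large,
    maintained change is found.
    """
    initial = angles[0]
    start = None
    for i, a in enumerate(angles):
        if start is None:
            # The transition starts when the attitude first leaves the
            # initial orientation.
            if deviation(a, initial) > settle_thresh:
                start = i
            continue
        window = angles[i:i + hold]
        # The transition ends when the change is large and the new
        # orientation is maintained for `hold` consecutive samples.
        if (len(window) == hold
                and deviation(a, initial) > change_thresh
                and all(deviation(w, a) <= settle_thresh for w in window)):
            return start, i, (i - start) * dt
    return None
```

The returned indices bound the transition period, so the samples between them are the ones a trajectory estimate would be computed over to verify the initial classification.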

IMU Activity Detection

The activity of the user can be determined by processing the raw IMU data with the addition of orientation and gesture data. Active periods are initially determined by filtering the accelerometer magnitude with a high-pass filter followed by a low-pass filter. These active periods are then categorized as walking periods or orientation transition periods.
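A minimal sketch of the active-period detection is shown below. It uses first-order filters for simplicity. The filter coefficients, the rectification step between the two filters, and the threshold are assumptions, since the source does not specify the filter design.

```python
import math

def high_pass(x, alpha):
    """First-order high-pass filter: removes the slowly varying gravity part."""
    if not x:
        return []
    y = [0.0]
    for i in range(1, len(x)):
        y.append(alpha * (y[-1] + x[i] - x[i - 1]))
    return y

def low_pass(x, alpha):
    """First-order low-pass filter: smooths the signal into an envelope."""
    if not x:
        return []
    y = [x[0]]
    for i in range(1, len(x)):
        y.append(y[-1] + alpha * (x[i] - y[-1]))
    return y

def active_mask(accel, hp_alpha=0.9, lp_alpha=0.2, threshold=0.3):
    """Return one boolean per sample: True where the user appears active.

    accel: list of (ax, ay, az) accelerometer samples in m/s^2.
    """
    mag = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in accel]
    hp = high_pass(mag, hp_alpha)
    # Rectify so that the low-pass filter tracks motion energy (assumed step).
    envelope = low_pass([abs(v) for v in hp], lp_alpha)
    return [e > threshold for e in envelope]
```

Consecutive `True` samples form an active period. Whether a period is a walking period or an orientation transition period would then be decided using the orientation and gesture data.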